Detailed Plan

To be able to rapidly fund urgent projects, consider thousands of projects each cycle, and ensure that the funded projects are community-aligned, at least the following needs to be done:

- Unify and simplify participation criteria for rapid funding.

- Find a process to quickly identify broad community preferences.

- Find a process to quickly preselect proposals most aligned with the community preferences.

- Predictably allocate funding to the best-matching proposals.

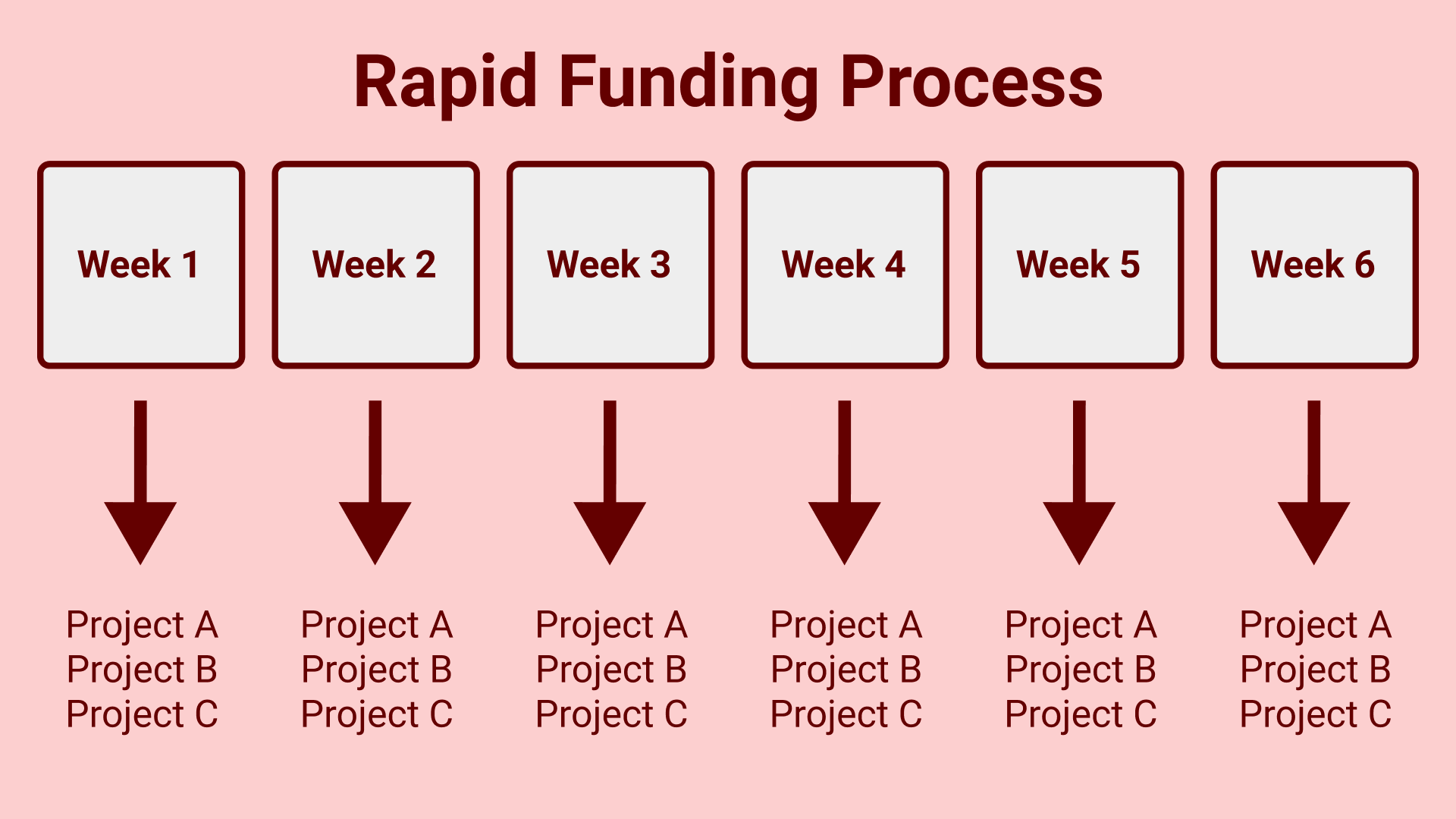

This proposal aims to provide an example implementation of a rapid-funding process capable of providing funding every week. It leverages a practical technical approach that is widespread in search engines, pairwise comparison/ranking, to solve two problems: identifying changing community preferences and preselecting the most-aligned proposals.

https://en.wikipedia.org/wiki/Pairwise_comparison
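To make the pairwise idea concrete, here is a minimal Python sketch of the classic Bradley-Terry model, which turns a list of "A is better than B" votes into one score per project. It is an illustration under stated assumptions, not the algorithm this proposal commits to: the function name `bradley_terry` and the toy votes are hypothetical, and the fit assumes every project both wins and loses at least once (otherwise the maximum-likelihood scores diverge and a regularized variant would be needed).

```python
from collections import Counter, defaultdict


def bradley_terry(comparisons, iterations=200):
    """Fit Bradley-Terry strengths from (winner, loser) pairs.

    Model: P(i is preferred over j) = s_i / (s_i + s_j).
    Uses the standard minorization-maximization (MM) update
        s_i <- W_i / sum_j [n_ij / (s_i + s_j)],
    where W_i is the number of wins of i and n_ij the number of i-vs-j votes.
    """
    wins = Counter(winner for winner, _ in comparisons)
    n = defaultdict(Counter)  # n[i][j]: how often i and j were compared
    for winner, loser in comparisons:
        n[winner][loser] += 1
        n[loser][winner] += 1
    items = sorted(n)
    s = {i: 1.0 for i in items}
    for _ in range(iterations):
        s = {
            i: wins[i] / sum(cnt / (s[i] + s[j]) for j, cnt in n[i].items())
            for i in items
        }
        scale = len(items) / sum(s.values())  # strengths are only defined up to scale
        s = {i: v * scale for i, v in s.items()}
    return s


# Toy data (hypothetical): each pair of projects was compared three times.
votes = [("A", "B"), ("A", "B"), ("B", "A"),
         ("B", "C"), ("B", "C"), ("C", "B"),
         ("A", "C"), ("A", "C"), ("C", "A")]
scores = bradley_terry(votes)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

A plain Bradley-Terry model can only score projects that already appear in some vote; scoring brand-new proposals requires a model that works from project content rather than project IDs.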

An example implementation could look like this:

Unify and simplify the criteria for rapid funding

- Only projects that cannot wait for the full Catalyst process are accepted: if funded three months later, would big opportunities (a multiple of the funding requested) be lost, or would damage be done to the community?

- Only financing till the end of the next Catalyst Cycle is requested: 2-3 months of runway.

- All projects are reviewed in one pipeline, aiming to create the most value for the community from the funds available.

- All unfunded projects are considered every week.

- New projects can be added every week.

Community-preference identification and matching

- Community voting is reformulated as a comparison of two projects at a time: "Which project is better, A or B?"

- Community votes are stored indefinitely.

- Votes of each voter are used to construct a personal-preferences function (a voting agent) with the help of ML algorithms (technical details are at the end of the proposal).

- The personal-preferences function is used to rate projects that the voter has not yet rated explicitly.

- A joint preferences function of all voters is constructed and is used to suggest the best-matching proposals for the whole community.
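The voting agents and the joint preference function could be implemented in many ways; the Python sketch below shows one simple possibility under assumptions that are not part of the proposal: each project is described by a numeric feature vector (in practice perhaps a text embedding of its description; the two-dimensional vectors here are made up), each voter's agent is a linear logistic model trained on that voter's pairwise votes, and the community ranking is a Borda-style sum of rank positions across agents. All function names are hypothetical.

```python
import math


def fit_voter_agent(votes, features, epochs=500, lr=0.5, l2=0.01):
    """Learn a linear preference function w for one voter (hypothetical design).

    votes:    list of (preferred_id, other_id) pairs cast by this voter.
    features: dict project_id -> list of floats (hypothetical embeddings).
    Model:    P(a preferred over b) = sigmoid(w . (x_a - x_b)).
    """
    dim = len(next(iter(features.values())))
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [l2 * wk for wk in w]  # L2 penalty keeps w small when votes are few
        for a, b in votes:
            diff = [xa - xb for xa, xb in zip(features[a], features[b])]
            p = 1.0 / (1.0 + math.exp(-sum(wk * d for wk, d in zip(w, diff))))
            for k in range(dim):
                grad[k] -= (1.0 - p) * diff[k] / len(votes)  # gradient of mean -log(p)
        w = [wk - lr * g for wk, g in zip(w, grad)]
    return w


def score(w, x):
    """Personal preference score of a project with feature vector x."""
    return sum(wk * xk for wk, xk in zip(w, x))


def community_ranking(agents, features):
    """Joint preference (assumed Borda-style): sum each agent's rank positions."""
    points = {pid: 0 for pid in features}
    for w in agents:
        ascending = sorted(features, key=lambda pid: score(w, features[pid]))
        for position, pid in enumerate(ascending):  # least preferred gets 0 points
            points[pid] += position
    return sorted(points, key=points.get, reverse=True)


features = {"P1": [1.0, 0.0], "P2": [0.0, 1.0], "P3": [0.5, 0.5], "P4": [0.9, 0.2]}
voter_votes = [
    [("P1", "P2"), ("P3", "P2")],  # voter 1
    [("P2", "P1")],                # voter 2
    [("P4", "P3")],                # voter 3
]
agents = [fit_voter_agent(v, features) for v in voter_votes]
print(community_ranking(agents, features))
```

Because each agent scores feature vectors rather than project IDs, it can rank projects its voter never compared: in the toy data, voter 3 only compared P4 with P3, yet their agent still places P1 first.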

A predictable process to weekly fund best-matching proposals

<u>Every Monday:</u>

- A ranking of the best-matching projects is made available.

- A team of 11 approvers is selected using weighted random sampling without replacement:

  - weighting is based on the funds in voting wallets, to resemble Catalyst voting, so wallets with more funds have a proportionally higher chance of being selected (this prevents an attack in which many new wallets with minimal funds are created to skew the selection).

  - each wallet can be chosen only once per cycle (to increase the representation of different opinions).
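Weighted sampling without replacement can be sketched in a few lines of Python with the Efraimidis-Spirakis method. This is an assumed implementation, not one specified in the proposal: wallet IDs and balances are hypothetical inputs, and the optional `exclude` argument stands in for wallets already chosen earlier in the cycle.

```python
import math
import random


def weighted_order(wallet_funds, exclude=(), seed=None):
    """Random order of wallets, weighted by funds, without replacement.

    Efraimidis-Spirakis method: give each wallet the key log(u) / funds with
    u ~ Uniform(0, 1] and sort keys in descending order. Taking the first k
    wallets is equivalent to drawing k wallets one by one, each time with
    probability proportional to funds among the wallets not yet drawn.
    """
    rng = random.Random(seed)
    keys = {
        wallet: math.log(1.0 - rng.random()) / funds  # 1 - random() is in (0, 1]
        for wallet, funds in wallet_funds.items()
        if funds > 0 and wallet not in exclude  # e.g. wallets already chosen this cycle
    }
    return sorted(keys, key=keys.get, reverse=True)


def select_approvers(wallet_funds, k=11, exclude=(), seed=None):
    """First k wallets of the weighted random order (hypothetical helper)."""
    return weighted_order(wallet_funds, exclude, seed)[:k]


# Hypothetical balances: 20 small wallets and one large one.
wallets = {f"wallet{i}": 1 for i in range(20)}
wallets["whale"] = 1000
print(select_approvers(wallets, seed=42))
```

The full order returned by `weighted_order` also fits the replacement rule described under "During the week": if an invited approver declines or does not respond, the next wallet in the order can be invited, which is equivalent to one more weighted draw from the wallets not yet drawn.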

<u>During the week:</u>

- Each community member has the time to review the top-ranked projects and adjust their individual preferences if necessary.

- Selected approvers confirm or decline their participation. If an approver declines or does not respond within 24 hours, another one is invited until a full team of 11 approvers is gathered.

<u>On Friday:</u>

- The list of 11 best-matching projects is recomputed using the latest community preferences.

- The approvers are invited to provide their feedback on the best-matching projects.

<u>During the weekend:</u>

- The review team analyses the best-matching projects for alignment with the rapid-funding criteria and with the community preferences, and checks the proposers' performance in previous funding rounds, if any.

- Each approver makes an APPROVE/REJECT decision for each of the best-matching projects, and writes a rationale explaining the reasons behind their decisions.

- The funding available for the week is allocated in order of the ratio of APPROVE to REJECT votes. To be funded, a project must receive more APPROVE votes than REJECT votes (simple majority).
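The allocation rule can be expressed as a short Python function. Two details are assumptions because the proposal leaves them open: ties in the approval ratio are broken by the smaller request, and a project whose request exceeds the remaining weekly budget is skipped in favor of the next one rather than ending the allocation. Project IDs and amounts are hypothetical.

```python
def allocate_weekly_funds(decisions, requests, budget):
    """Allocate the weekly budget to approved projects (sketch).

    decisions: project -> (approve_count, reject_count) from the approvers.
    requests:  project -> amount requested.
    Returns (funded projects in allocation order, unallocated remainder).
    """
    # Simple majority: strictly more APPROVE than REJECT votes.
    eligible = [p for p, (approve, reject) in decisions.items() if approve > reject]
    # Order by APPROVE share, which ranks projects the same way as the
    # APPROVE/REJECT ratio but never divides by zero.
    # Ties are broken by the smaller request (an assumption).
    eligible.sort(key=lambda p: (-decisions[p][0] / sum(decisions[p]), requests[p]))
    funded, remaining = [], budget
    for p in eligible:
        if requests[p] <= remaining:  # assumption: skip projects that no longer fit
            funded.append(p)
            remaining -= requests[p]
    return funded, remaining


decisions = {"P1": (10, 1), "P2": (8, 3), "P3": (6, 5), "P4": (5, 6)}
requests = {"P1": 20_000, "P2": 25_000, "P3": 10_000, "P4": 5_000}
print(allocate_weekly_funds(decisions, requests, budget=50_000))
```

In this example P4 is excluded by the majority rule, and P3 (10,000) no longer fits after P1 and P2 are funded, leaving 5,000 unallocated.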

- The information about the funded projects along with the voting data and rationales is made available to the community.

A discussion of the impact

The introduction of an ML agent that represents each voter when the voter does not have time to vote explicitly unlocks the following benefits:

- Makes it possible to run the funding process on a weekly schedule.

- Allows the funding process to scale to thousands of projects and beyond.

- Allows for better alignment of funding decisions with the opinion of the whole community, even when some members don't have the time to participate and review projects.

- Makes it possible, in the future, to optimize Catalyst funding using the same fundamental concepts.

- Makes it possible to automatically provide early feedback to proposers in the form of the likelihood that their proposal gets into the top 11 projects for review that week.

Implementation plan (tentative), deliverables, and definitions of success

Below is a high-level overview of the activities. Deeper technical explanations can be found in the section "Answers to common technical/algorithmic questions".

<u>Stage 1 - Build an MVP and present it to the community</u>

Milestone 1 (duration: 1 month): prepare the data

- Develop the code to extract metadata of historical and incoming proposals (text descriptions, funding requested, etc.) from IdeaScale.

- Develop the code to extract historical votes to serve as supplemental data for algorithm testing.

- Deliverable 1: a dataset suitable for the development of preferences-learning ML algorithms is ready.

Milestone 2 (duration: 2 months): prepare first versions of the algorithms

- Develop a proof-of-concept implementation of the algorithm learning individual preferences of voters.

- Develop a proof-of-concept implementation of the algorithm aggregating individual preferences into the community's preferences.

- Deliverable 2: draft algorithms are published and are capable of learning from the prepared dataset and generating individual and whole-community rankings of proposals.

Success after 3 months is that the algorithm-development dataset and first versions of algorithms are published.

Milestone 3 (duration: 3 months): prepare a prototype suitable for community testing

- Develop a UI for voters to review and adjust their personal project ranking.

- Develop a UI to present the highest ranked projects to the community.

- Develop a UI to organize the review work by the approvers and for publishing of the funding results.

- Analyze and further improve the algorithms.

- Prepare documentation and a demo presentation of the proof-of-concept for the community.

- Deliverable 3: a demo presentation of the funding-support software and algorithms to the community.

Success after 6 months is that the prototype implementation (algorithms + UI) is ready and presented to the community.

The projected launch date (the time of the demo) is July/August 2022.

Software components developed during the first 6 months are to be considered proof-of-concept versions. The objective is for them to be sufficient to conduct the 12-week Test Rapid Fund experiment.

<u>Stage 2 - Raise funds for a quick 12-week Test Rapid Fund and operate it</u>

- Collect feedback from the community after the presentation.

- Deliverable 4: a Catalyst Proposal for a Test Rapid Fund for experimental distribution of funds over 12 weeks (a small % of the funding will be allocated to reward approvers and support operations/improvements to the software during the experiment).

- Make adjustments to the prototype necessary for the feasibility of the Test Rapid Fund proposal.

Success after 12 months is that the Test Rapid Fund is funded and receives high feedback scores from the community after operating for 12 weeks.

Team/Experience

The proposer's background and experience: <https://www.linkedin.com/in/alexsierkov/>

Budget use (tentative)

Below is a high-level overview of the use of funds. Given that this is a unique project, the necessary funds and resources can only be estimated tentatively. Based on the proposer's multi-year experience in building unique ML products, this distribution makes sense and provides confidence that the project can be delivered within the proposed timeline. Further explanations can be found in "Answers to common organizational/budget questions".

- Data science work to build the initial learning and ranking algorithms ~ 30%.

- Backend/Data engineering work to collect and keep improving the project dataset (proposal data, historical votes, etc.) ~ 15%.

- Data science work to analyze the algorithms for bias and other potential issues ~ 10%.

- Consultations with independent experts and additional paid collection of labeled data to improve models ~ 10%.

- Frontend/UI engineering work to implement prototypes of the user interfaces ~ 10%.

- UI/UX design work to design the prototypes of the user interfaces ~5%.

- Backend/database engineering work to organize the storage of voting and preferences data ~5%.

- Systems administration work to set up development servers, demo instances, and ML pipelines, and to operate them for 12 months ~5%.

- Documentation and work to create a demo presentation of the project ~5%.

- Project management/organizational work ~5%.

The budget is 85,000 EUR (the author is based in Germany), which is approximately $96,000.
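The listed shares add up to 100%, so the tentative amount for each line item follows directly from the 85,000 EUR total. A small Python sketch of that arithmetic (the item labels are shortened paraphrases of the list above):

```python
BUDGET_EUR = 85_000

# Tentative shares (in %) from the budget list above; labels are paraphrased.
SHARES = {
    "Data science: initial learning and ranking algorithms": 30,
    "Backend/data engineering: project dataset": 15,
    "Data science: bias and issue analysis": 10,
    "Expert consultations and labeled data": 10,
    "Frontend/UI engineering": 10,
    "UI/UX design": 5,
    "Backend/database engineering: vote storage": 5,
    "Systems administration (12 months)": 5,
    "Documentation and demo": 5,
    "Project management": 5,
}


def breakdown(total, shares):
    """Convert percentage shares into amounts; shares must add up to 100%."""
    assert sum(shares.values()) == 100, "shares must sum to 100%"
    return {item: total * pct // 100 for item, pct in shares.items()}


for item, amount in breakdown(BUDGET_EUR, SHARES).items():
    print(f"{item:<55} {amount:>7,} EUR")
```

For example, the 30% algorithm-development share corresponds to 25,500 EUR, each 10% item to 8,500 EUR, and each 5% item to 4,250 EUR.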

Deliverables (Yes/No)

- A published dataset suitable for developing preference-learning algorithms.

- A published open-source implementation of the developed algorithms.

- A published open-source implementation of the user interfaces supporting the rapid funding process.

- A perpetual non-exclusive license to the Cardano community to use and further develop the code, likely GPLv3 or similar, to benefit other open-source projects. More about the GPLv3 license: https://fossa.com/blog/open-source-software-licenses-101-gpl-v3/

- A demo presentation to the Cardano community.

- A Catalyst proposal for Test Rapid Fund.

KPIs/Metrics

- Workload on the community: the average time it takes for one approver to evaluate 11 projects.

- Effectiveness of preference learning: the average time it takes a voter to adjust their personal ranking of projects until they are satisfied with the top 3 projects.

- Real-world feedback: if the Test Rapid Fund is approved, the ratings of 12 weekly posts, each presenting the projects funded that week, the issues encountered, and the proposed/implemented improvements.

Alignment with the challenge's objectives

- Is it faster? Yes, the proposal presents a practical way to fund projects weekly instead of once per Catalyst cycle (roughly every three months), an order-of-magnitude improvement.

- Is it scalable? Yes, this approach can easily scale to thousands of proposals and beyond.

- Is it transparent? Yes: each voter can verify personal preferences, the community can see the combined preferences, the funded projects are reviewed and rationales and voting are published. Moreover, the source code and the development dataset are open-source.

- Is it accountable? Yes: all funding activities are documented and published for community review. The review phase additionally checks the delivery status of projects previously funded for the same proposer.

- Is it impactful? Yes. In addition to accelerating funding and providing components to optimize Catalyst funding as well, the underlying technology can help the community better understand itself, because the ML agents can be used to conduct further studies of the community without an extra time burden on voters.

Answers to common technical/algorithmic questions

Are human evaluations really transitive and if not will this break pairwise ranking?

- The transitivity of human evaluations depends on the problem. For example:

a) Comparing expenses ($1000 vs $100 vs $10) is fully transitive, assuming good intentions.

b) Comparing moves in the Rock-Paper-Scissors game is not transitive.

- In practical cases of e-commerce search (Amazon's product search) and information retrieval (Google's search), pairwise comparison works quite well.

- There are algorithms that work under assumptions of weak transitivity (a user can define a stable order of items); they just require more actions from the user. So, it is not a blocker.

- Given the nature of the rapid-funding problem (evaluating the ROI for the community), I'd say that the opinions of individual voters are closer to being transitive (ordering inconsistencies are more likely between different voters than among projects analyzed by a single voter), so it's worth the risk to try.
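Whether one voter's pairwise votes are transitive can be checked directly: the votes are consistent with a stable order exactly when the directed "preferred over" graph contains no cycle. A minimal Python sketch using Kahn's topological sort follows; the function name is hypothetical, and the proposal does not specify how inconsistencies would be detected or handled.

```python
from collections import defaultdict, deque


def consistent_order(votes):
    """Return an order consistent with all (preferred, other) votes,
    or None if the votes contain a cycle such as A>B, B>C, C>A."""
    beats = defaultdict(set)     # beats[x]: items x was preferred over
    indegree = defaultdict(int)  # number of distinct items preferred over x
    items = set()
    for winner, loser in votes:
        items.update((winner, loser))
        if loser not in beats[winner]:
            beats[winner].add(loser)
            indegree[loser] += 1
    # Kahn's algorithm: repeatedly take an item nobody remaining is preferred over.
    queue = deque(sorted(i for i in items if indegree[i] == 0))
    order = []
    while queue:
        item = queue.popleft()
        order.append(item)
        for other in sorted(beats[item]):
            indegree[other] -= 1
            if indegree[other] == 0:
                queue.append(other)
    return order if len(order) == len(items) else None  # leftovers mean a cycle


print(consistent_order([("$1000", "$100"), ("$100", "$10")]))
print(consistent_order([("paper", "rock"), ("rock", "scissors"), ("scissors", "paper")]))
```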

Can we really learn human preferences using just the plain text of proposals?

There has been noticeable progress in Natural Language Processing in recent years: Transformer models, self-supervised learning, etc. This progress makes it possible to build robust classifiers (a pairwise comparison model is a classifier) from plain-text inputs with reduced requirements for labeled data. This is why now is a good time to test this approach.

Here are two relevant recent papers that provide more details on the approach:

- ALBERT: A Lite BERT for Self-supervised Learning of Language Representations:

<https://arxiv.org/pdf/1909.11942v6.pdf>

- XLNet: Generalized Autoregressive Pretraining for Language Understanding:

<https://arxiv.org/pdf/1906.08237v2.pdf>

Historical voting data are not pairwise comparisons, can they be really reused to develop algorithms?

It is possible to leverage non-pairwise votes for testing the algorithms, for example, by assuming that voters prefer any project they have voted for over any project they have not voted for. Reusing the existing voting and proposal data will make it possible to start the model-development work sooner.
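The assumption described above can be sketched as a tiny Python conversion from one voter's historical votes to pairwise comparisons. The function name is hypothetical, and restricting `considered` to the projects the voter could actually have voted on (for example, one challenge) is an additional assumption.

```python
from itertools import product


def votes_to_pairs(voted_for, considered):
    """Turn one voter's approval-style votes into pairwise comparisons.

    Assumption from the text: the voter prefers every project they voted for
    over every project they did not vote for. `considered` is the set of
    projects the voter could have voted on (e.g. one challenge; an assumption).
    """
    supported = sorted(set(voted_for))
    not_supported = sorted(set(considered) - set(voted_for))
    return list(product(supported, not_supported))  # (preferred, other) pairs


print(votes_to_pairs(["P1", "P3"], ["P1", "P2", "P3", "P4"]))
```

Pairs derived this way are likely noisier than explicit comparisons, since a project without a vote may simply not have been reviewed, which is why the text limits them to algorithm testing.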

Why the magic number 11 (the number of approvers / best-matching projects)?

The proposal tries to balance reducing the workload on the community against having enough data and feedback (11*11 = 121 new votes/feedback items each week) to prevent funding mistakes and to optimize the models during the 12-week Test Rapid Fund. Based on the experience of running the Test Rapid Fund, the number can be adjusted later.

Answers to common organizational/budget questions

The requested funds constitute a big chunk of the Challenge's budget, does this make sense?

- Due to the deep technical nature of the project, the first reasonable milestone for the community to review progress and decide on further investment is an MVP plus a community demo. Sadly, building the MVP is exactly the key cost driver of the budget.

- At the same time, the proposal presents a solution that can solve all the scaling and processing-speed needs, so, despite the additional technical risks, it has a very high ROI.

- The requested budget is very favourable compared with the budget needed if the proposal were developed inside a traditional tech company (Google, Facebook, etc.): a team of 5-6 employees (1-2 data scientists, a backend/data engineer, a frontend engineer, a half-time designer, a product manager) funded for 6 months would require ~$550k = (5.5 people * 1/2 year * $200k employee cost per year [salary + stock options + bonuses + office space + equipment + perks + insurance, etc.]).

- The requested budget is made possible by the proposer's personal experience and know-how gained in the field, connections to the global network of talent, and exposure to socially oriented experts interested in developing better approaches to community self-governance.

This is a new/prototype technology, what are the risks?

- There is a chance that learning a perfectly aligned preference function for each voter from very few (fewer than 10) votes will be challenging. Fortunately, thanks to the pairwise reformulation of voting, a simple weekly review and adjustment by voters of their top 3 proposals using alternative mechanisms (variants of sampling from all proposals) could already provide sufficient information for a quality community ranking of projects. Moreover, using the votes of all voters to train a whole-community preferences model can help reduce the number of votes needed to align the personal preference models.

- The proposal assumes reasonably active community participation (at least 20% of wallet owners can respond within 24 hours) to select a team of 11 approvers every week. If that's not the case, alternative methods of approver selection might be needed, such as asking prospective approvers to preregister so that approvers are randomly selected only from the prequalified list.